Creating a 'pooled' dependency injection lifetime : Andrew Lock

by: Andrew Lock

The post content below is copied from Andrew Lock | .NET Escapades

This post follows on from my previous post, in which I discussed some theoretical/experimental dependency-injection lifetimes, based on the discussion in an episode of The Breakpoint Show. In the previous post I provided an overview of the built-in Dependency Injection lifetimes, and described the additional proposed lifetimes: tenant, pooled, and drifter.

The previous post provided an overview of each of these proposed lifetimes, and an implementation of the drifter (time-based) lifetime. In this post I provide an example of the "pooled" lifetime.

Dependency injection lifetimes

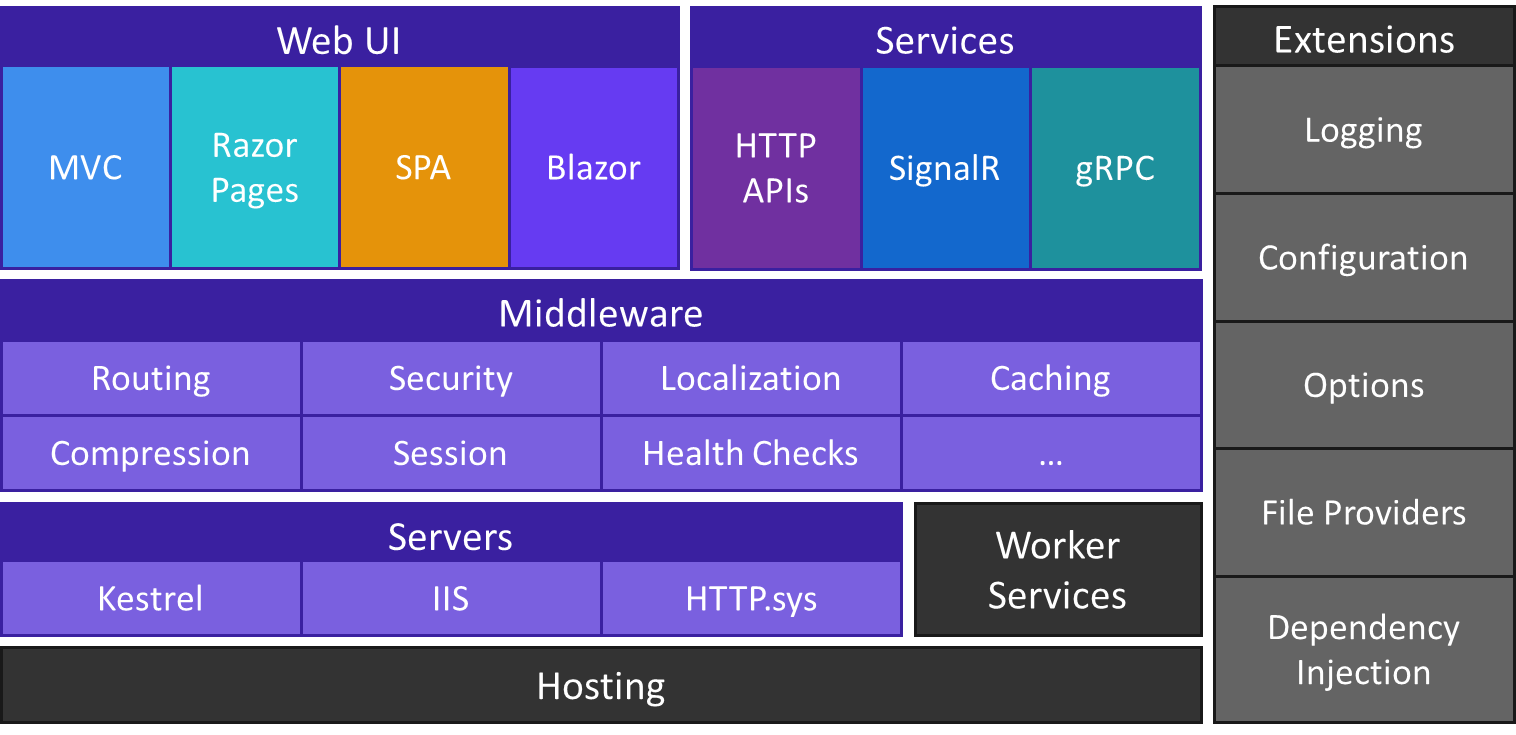

This post is intended as a direct follow-on from the previous post in which I provide the full context, but I'll provide a brief outline of the premise here. When you register services in the .NET Core DI container, you choose one of three different lifetimes.

- Singleton

- Scoped

- Transient

The lifetime you specify controls how and when the DI container chooses to create a new instance of a given service, and when it instead returns an already-existing instance of the service:

- Singleton—only a single instance is ever created

- Scoped—a new instance is created once per "scope" (typically per request)

- Transient—a new instance is created every time it's needed
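
As a rough illustration of the three lifetimes, the following sketch registers one hypothetical marker type per lifetime and compares the instances resolved from two scopes. The type names (SingletonThing, ScopedThing, TransientThing, LifetimeDemo) are made up for this example, and it assumes the Microsoft.Extensions.DependencyInjection package is referenced:

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical marker types, one per lifetime (not from the original post)
public sealed class SingletonThing { }
public sealed class ScopedThing { }
public sealed class TransientThing { }

public static class LifetimeDemo
{
    // Each flag is true when the two resolutions returned the same instance
    public static (bool Singleton, bool ScopedSameScope, bool ScopedOtherScope, bool Transient) Run()
    {
        var services = new ServiceCollection();
        services.AddSingleton<SingletonThing>();
        services.AddScoped<ScopedThing>();
        services.AddTransient<TransientThing>();

        using var provider = services.BuildServiceProvider();
        using var scopeA = provider.CreateScope();
        using var scopeB = provider.CreateScope();
        var a = scopeA.ServiceProvider;
        var b = scopeB.ServiceProvider;

        return (
            // Singleton: one instance, shared across scopes
            ReferenceEquals(a.GetRequiredService<SingletonThing>(), b.GetRequiredService<SingletonThing>()),
            // Scoped: the same instance within a single scope...
            ReferenceEquals(a.GetRequiredService<ScopedThing>(), a.GetRequiredService<ScopedThing>()),
            // ...but a different instance in another scope
            ReferenceEquals(a.GetRequiredService<ScopedThing>(), b.GetRequiredService<ScopedThing>()),
            // Transient: a new instance on every resolution
            ReferenceEquals(a.GetRequiredService<TransientThing>(), a.GetRequiredService<TransientThing>()));
    }
}
```

Calling LifetimeDemo.Run() should report that the singleton is shared across scopes, the scoped service is shared only within a scope, and the transient service is never shared.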

For a full introduction to dependency injection in .NET Core, see the Microsoft documentation, or chapters 8 and 9 of my book.

In episode 36 of The Breakpoint Show, Khalid, Maarten, and Woody discuss the three lifetimes I described above, providing some examples of when you might choose each one, problems to watch out for, and other things to consider.

Throughout the show, they also discuss the desire for three "additional" types of services, which didn't quite fit into the standard lifetimes:

- Tenant-scoped services—effectively per-tenant singletons

- Time-based (drifter) services—singleton services that are replaced periodically

- Pooled services—reuses a "pool" of services

You can read one way to implement tenant-scoped services in this blog series from Michael McKenna, and I showed an implementation of the time-based/drifter lifetime in my previous post. In this post I look at one possible implementation of a pooled lifetime.

Pooled lifetime requirements

Woody mentioned pooling in the podcast episode as a way to reduce allocations and thereby improve performance. The pooled lifetime (inspired by EF Core's DbContext pooling feature) would make this capability a general DI feature, allowing pooling of "arbitrary" services.

In general, I considered the requirements for this feature to be:

- Pooled services should have "scoped" semantics, i.e. when a pooled instance is used, it should be used for the whole request scope, and should not be used by parallel requests.

- When a pooled service is requested, the DI container should use a pooled instance first, if available. If no pooled instances are available, the DI container should create a new instance.

- When the scope is disposed, pooled services should be returned to the pool.

- The DI container should pool a maximum of N instances of the service. When an instance is returned, if there are already N instances in the pool, the returned instance should be discarded (calling Dispose() if required).

- Pooled services must implement the IResettableService interface, which contains a single Reset method.

- When an instance is returned to the pool, the pool should call Reset() on the instance. This must reset the instance, so that it's safe to reuse in another request.

- Other than IResettableService, there should be no other "additional" requirements on the pooled service.

- If a service implements IDisposable, it must be disposed if it is not returned to the pool.

I considered those to be the main requirements, but to make my proof of concept implementation a bit easier, I added a few anti-requirements:

- It's permissible to return a "wrapper" type to access the pooled service (similar to how the IOptions<> abstraction works in ASP.NET Core).

- Ignore async requirements for now i.e. no need for a ResetAsync() or supporting IAsyncDisposable.

- Don't worry about configuration of the pool i.e. allow a "fixed" maximum size for the pool.

That covers the majority of the important behaviour points. In the next section, I'll show an example implementation that meets all of these.

Note that I chose not to use ObjectPool<T> to explore this. Not for any principled reason, I was just hoping to have a slightly different API. You can see a similar implementation that uses ObjectPool<T> in the Microsoft docs.

Implementing a pooled lifetime service

There are 5 different moving parts in the implementation:

- IResettableService: The interface that resettable services must implement

- IPooledService<T>: The interface used to access an instance of item T (analogous to IOptions<T>)

- PooledService<T>: The internal implementation of IPooledService<T>

- DependencyPool<T>: The pooling implementation, responsible for the rent and return of IPooledService<T>

- PoolingExtensions: Helper methods for adding the required services to the DI container.

Now let's look at each of these in turn.

IResettableService

This is the only requirement on the pooled service itself, and there's only one method to implement, Reset():

public interface IResettableService

{

void Reset();

}

When called, Reset() should reset the service to its "original" state, so that it can be reused when it's removed from the pool. If we compare this to DbContext (the original inspiration for the pooling idea), this is where the change tracking would be reset, for example.
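
To make the contract concrete, here is a minimal sketch of a service that carries per-request state and clears it in Reset(). The RequestLog type and its members are hypothetical and not from the original post; the interface is repeated only so the sketch compiles on its own:

```csharp
using System.Collections.Generic;

// Same shape as the interface shown above, repeated so this sketch is self-contained
public interface IResettableService
{
    void Reset();
}

// Hypothetical service that accumulates state during a single request
public sealed class RequestLog : IResettableService
{
    private readonly List<string> _entries = new();

    public IReadOnlyList<string> Entries => _entries;

    public void Add(string entry) => _entries.Add(entry);

    // Clear the per-request state. List<T>.Clear() keeps the backing array,
    // so the next request can reuse the capacity that was already allocated.
    public void Reset() => _entries.Clear();
}
```

The key point is that after Reset() nothing from the previous request is observable, so the instance is safe to hand to a different scope.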

IPooledService<T>

The IPooledService<T> interface is how the application primarily retrieves instances of a pooled service T. It's the type that you inject into your service constructors, and it's how you access instances of T, similar to how IOptions<T> works for configuration:

public interface IPooledService<out T>

where T : IResettableService

{

T Value { get; }

}

As you can see from the above, the service T must implement IResettableService and can be accessed via the Value property. For example, you might use it in a dependent service like this:

public class DependentService

{

private readonly IMyService _myService;

public DependentService(IPooledService<IMyService> pooled)

{

// Extract the value from the IPooledService<T>

_myService = pooled.Value;

}

}

This level of indirection is a bit annoying from a practical point of view, but I found it to be a necessary evil to create a generalised pooling lifetime. All the alternatives I could come up with that wouldn't require IPooledService<T> instead required the IResettableService to do a lot more work, which I wanted to avoid for this implementation.

PooledService<T>

PooledService<T> is the internal implementation of IPooledService<T>:

internal class PooledService<T> : IPooledService<T>, IDisposable

where T : IResettableService

{

private readonly DependencyPool<T> _pool;

public PooledService(DependencyPool<T> pool)

{

_pool = pool;

// Rent a service from the pool

Value = _pool.Rent();

}

public T Value { get; }

void IDisposable.Dispose()

{

// When the PooledService<T> is disposed,

// the service is returned to the pool

_pool.Return(Value);

}

}

As you can see from the code above, the PooledService<T> implementation depends on the DependencyPool<T>, which we'll look into in detail shortly. The service rents an instance of T from the pool in the constructor. The DI container will then automatically dispose the PooledService<T> when the service scope ends, which returns the service T to the pool.

DependencyPool<T>

The DependencyPool<T> instance is where the bulk of the work happens for the implementation. The DependencyPool<T> is responsible for:

- Creating new instances of the service T if there are none in the pool.

- Adding returned services to the pool.

- Returning pooled services when available.

- Disposing services that can't be added to the pool.

using System.Collections.Concurrent;

using Microsoft.Extensions.DependencyInjection;

internal class DependencyPool<T>(IServiceProvider provider) : IDisposable

where T : IResettableService

{

private int _count = 0; // The number of instances in the pool

private int _maxPoolSize = 3; // TODO: Set via options

private readonly ConcurrentQueue<T> _pool = new();

private readonly Func<T> _factory = () => ActivatorUtilities.CreateInstance<T>(provider);

public T Rent()

{

// Try to retrieve an item from the pool

if (_pool.TryDequeue(out var service))

{

// An instance was removed from the pool, so decrement the count

Interlocked.Decrement(ref _count);

return service;

}

// No services in the pool

return _factory();

}

public void Return(T service)

{

if (Interlocked.Increment(ref _count) <= _maxPoolSize)

{

// there was space in the pool, so reset and return the service

service.Reset();

_pool.Enqueue(service);

}

else

{

// The maximum pool size has been exceeded

// We incremented when attempting to return, so reverse that

Interlocked.Decrement(ref _count);

(service as IDisposable)?.Dispose();

}

}

public void Dispose()

{

// If the pool itself is disposed, dispose all the pooled services

_maxPoolSize = 0;

while (_pool.TryDequeue(out var service))

{

(service as IDisposable)?.Dispose();

}

}

}

The implementation is relatively simple, but with a couple of interesting points:

- An IServiceProvider is passed in the constructor, and is used with ActivatorUtilities.CreateInstance() to create a "factory" Func<T> for creating instances of the service T.

- The number of pooled instances is stored in _count and is stored separately from ConcurrentQueue<T>. The use of Interlocked.Increment() and Interlocked.Decrement() ensures we don't exceed the maximum pool size.

- The maximum pool size in the above implementation is fixed at 3 but that could easily be made configurable.

- When the DependencyPool<T> itself is disposed, the pool ensures no more instances can be pooled (the maximum pool size is set to 0, so any instance returned later is disposed instead), and disposes all currently pooled instances.
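
One way the pool size could be made configurable is sketched below. This is a hypothetical stand-alone variant, not the implementation from the post: BoundedPool<T>, its reset delegate, and the maxPoolSize parameter are assumptions for illustration, and the factory is passed in directly rather than built from an IServiceProvider. The Rent/Return logic follows the same "increment first, undo if over the limit" approach described above:

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical pool with the factory, reset action, and maximum size supplied by the caller
public sealed class BoundedPool<T> where T : class
{
    private readonly ConcurrentQueue<T> _pool = new();
    private readonly Func<T> _factory;
    private readonly Action<T> _reset;
    private readonly int _maxPoolSize;
    private int _count; // The number of instances currently in the pool

    public BoundedPool(Func<T> factory, Action<T> reset, int maxPoolSize)
    {
        if (maxPoolSize < 0) throw new ArgumentOutOfRangeException(nameof(maxPoolSize));
        _factory = factory ?? throw new ArgumentNullException(nameof(factory));
        _reset = reset ?? throw new ArgumentNullException(nameof(reset));
        _maxPoolSize = maxPoolSize;
    }

    public int Count => Volatile.Read(ref _count);

    public T Rent()
    {
        // Prefer a pooled instance; fall back to creating a new one
        if (_pool.TryDequeue(out var item))
        {
            Interlocked.Decrement(ref _count);
            return item;
        }

        return _factory();
    }

    // Returns true if the instance was pooled, false if it was discarded
    public bool Return(T item)
    {
        // Reserve a slot first; only enqueue if the reservation stayed within the limit
        if (Interlocked.Increment(ref _count) <= _maxPoolSize)
        {
            _reset(item);
            _pool.Enqueue(item);
            return true;
        }

        // Over the limit: undo the reservation and discard the instance
        Interlocked.Decrement(ref _count);
        (item as IDisposable)?.Dispose();
        return false;
    }
}
```

In a DI setting the maximum size would more likely come from an options object, but the counting logic would stay the same.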

PoolingExtensions

We've pretty much covered all the moving parts now; the one last step is to register everything in the DI container. We only have two services we need to register here, DependencyPool<T> and IPooledService<T>:

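using Microsoft.Extensions.DependencyInjection;

using Microsoft.Extensions.DependencyInjection.Extensions;

public static class PoolingExtensions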

{

public static IServiceCollection AddScopedPooling<T>(this IServiceCollection services)

where T : class, IResettableService

{

services.TryAddSingleton<DependencyPool<T>>();

services.TryAddScoped<IPooledService<T>, PooledService<T>>();

return services;

}

}

Note that we don't register T itself as a service that you can directly pull from the container; you always need to retrieve an IPooledService<T> and access the T by calling Value. You might think that you could handle this automatically in the container, doing something like the following:

services.AddScoped(s => s.GetRequiredService<IPooledService<T>>().Value);

But unfortunately, that doesn't work. By having the DI container return the T directly, the DI container will automatically dispose the T when the scope ends. That's not what we want for pooled services—the pooled service will be handed out again, and we don't want it to be disposed, we rather want it to be reset.
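
The disposal behaviour described above can be observed with a small sketch. The Tracked and FactoryDisposalDemo types are hypothetical; the sketch assumes the Microsoft.Extensions.DependencyInjection package and simply stands in a pre-created instance for "something owned by a pool":

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical disposable service that records whether it has been disposed
public sealed class Tracked : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose() => IsDisposed = true;
}

public static class FactoryDisposalDemo
{
    // Returns true if the container disposed the instance when the scope ended
    public static bool Run()
    {
        // Pretend this instance is owned by a pool rather than by the container
        var owned = new Tracked();

        var services = new ServiceCollection();
        // Scoped factory registration that hands out the externally owned instance
        services.AddScoped(_ => owned);

        using var provider = services.BuildServiceProvider();
        using (var scope = provider.CreateScope())
        {
            scope.ServiceProvider.GetRequiredService<Tracked>();
        } // The scope tracks the resolved IDisposable and disposes it here

        return owned.IsDisposed;
    }
}
```

Because the container cannot tell that the factory returned an instance it doesn't own, the instance ends up disposed at the end of the scope, which is exactly what a pooled service needs to avoid.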

A reminder that an alternative to this implementation would be to use the ObjectPool<T> implementation, as described in the Microsoft docs.

Ok, we have a complete implementation, time to take it for a spin!

Testing the implementation

To test the service I'm using a similar test service as I used in the previous post, where each instance gets a different ID for its lifetime, so that we can easily see when new instances are created:

public class TestService : IResettableService, IDisposable

{

private static int _id = 0;

public int Id { get; } = Interlocked.Increment(ref _id);

public void Dispose() => Console.WriteLine($"Disposing service: {Id}");

public void Reset() => Console.WriteLine($"Resetting service: {Id}");

}

In addition, I've added some basic logs to the Reset() and Dispose() methods so we can more easily track what's going on.

To test it out, I created a small console app. The app creates a ServiceCollection, builds an IServiceProvider and then generates a bunch of scopes in parallel. For each scope, it retrieves a TestService instance, and prints its Id. It then disposes all the scopes. We then run the same sequence again:

using Microsoft.Extensions.DependencyInjection;

var collection = new ServiceCollection();

collection.AddScopedPooling<TestService>();

var services = collection.BuildServiceProvider();

Console.WriteLine("Generating scopes A");

GenerateScopes(services);

Console.WriteLine();

Console.WriteLine("Generating scopes B");

GenerateScopes(services);

static void GenerateScopes(IServiceProvider services)

{

var count = 5;

List<IServiceScope> scopes = new(count);

// Simulate 5 parallel requests

for (int i = 0; i < count; i++)

{

// Create a scope, but don't dispose it yet

var scope = services.CreateScope();

scopes.Add(scope);

// Retrieve an instance of the pooled service

var service = scope.ServiceProvider.GetRequiredService<IPooledService<TestService>>().Value;

Console.WriteLine($"Received value: {service.Id}");

}

foreach (var scope in scopes)

{

scope.Dispose();

}

}

When we run this code, we get the following sequence:

Generating scopes A

Received value: 1

Received value: 2

Received value: 3

Received value: 4

Received value: 5

Resetting service: 1

Resetting service: 2

Resetting service: 3

Disposing service: 4

Disposing service: 5

Generating scopes B

Received value: 1

Received value: 2

Received value: 3

Received value: 6

Received value: 7

Resetting service: 1

Resetting service: 2

Resetting service: 3

Disposing service: 6

Disposing service: 7

By looking at the Ids printed, we can see this works as expected:

- 5 new instances are created to satisfy the 5 parallel scopes.

- When the scopes are disposed, the maximum 3 instances are reset and stored in the pool. The remaining two services are disposed as they can't be pooled.

- When the sequence is run again, the first 3 requests use pooled instances. The remaining two instances must be created, giving Ids 6 and 7.

- When the scopes are disposed, again the 3 instances are pooled, and the remaining 2 are disposed.

So it seems the implementation is working as expected, but I think it's worth thinking about the limitations and considering whether you should use something like this.

Limitations in the pooled lifetime implementation

The inspiration for the pooling lifetime was EF Core's pooling of DbContext, but the above implementation is fundamentally a bit different. The DbContext was designed to "know" about pooling, and its internal implementation has knowledge of DbContextLease which tracks the origin of DbContext instances etc. That stands in contrast to the above implementation in which the implementation class doesn't need to know about the pooling details (other than providing a Reset() implementation).

The net result of the DbContext implementation is that the usage of DbContext is identical, whether or not you use pooling. That again contrasts with the implementation in this post, in which you must use the IPooledService<T> intermediate abstraction.

Another thing to consider in the above implementation is that your pooled services can't depend on scoped services, even though the services behave somewhat like scoped services themselves. That's because the instances "live" longer than a single service scope, so the only lifetime that really makes sense for dependencies is singleton, or possibly transient.

Yet another aspect to consider is that the pool is very simplistic. It's first-come first-served in both renting and return. Once the items are in the pool, they're there to stay until they're rented again. You could improve all of that of course, but as you make things more complicated, you risk removing the benefits that pooling could bring.

Is pooling actually useful?

The original inspiration for the pooling lifetime was EF Core's DbContext pooling, for which a single-threaded benchmark shows that pooling can improve performance and reduce allocation:

| Method | NumBlogs | Mean | Error | StdDev | Gen 0 | Gen 1 | Gen 2 | Allocated |
|---|---|---|---|---|---|---|---|---|
| WithoutContextPooling | 1 | 701.6 us | 26.62 us | 78.48 us | 11.7188 | - | - | 50.38 KB |
| WithContextPooling | 1 | 350.1 us | 6.80 us | 14.64 us | 0.9766 | - | - | 4.63 KB |

However, just because pooling is beneficial overall for EF Core doesn't necessarily mean it will always be beneficial. This is explicitly called out by Microsoft in a blog post discussing pooling of ValueTask instances, back in .NET 5:

In employing such a pool, the developer is betting that they can implement a custom allocator (which is really what a pool is) that’s better than the general-purpose GC allocator. Beating the GC is not trivial. But, a developer might be able to, given knowledge they have of their specific scenario.

Memory allocation in .NET is very efficient; the runtime is fast at allocating memory and is even quick at cleaning up small objects. So although pooling means the allocator generally doesn't need to run as much, that's not really where you're getting performance improvements.

One possible source of performance improvements from pooling can come when the objects being allocated are large. Large objects are generally more expensive for the GC, because the more memory allocated, the more often the GC has to run, and the more work it has to do (to zero out the memory etc).

Pooling can also provide an advantage if constructing the objects is expensive. This could be because the constructor itself does a bunch of work. It could also be because the DI container doesn't need to calculate and reconstruct the full dependency graph for the object every time it's requested. Or it could be because you need to use a limited OS resource.

However, there are interesting problems to think about here, which could undermine any garbage collection improvements you might expect to see from pooling.

First of all, if the Reset() method has to do more work than the GC would in collecting it, then you've immediately lost any advantage you could expect to get from pooling. But there's an even more subtle issue.

The .NET GC is a generational garbage collector. Newly allocated objects are placed in Gen 0, and can typically be quickly cleaned up. If a given object survives a garbage collection because it is still in use, it is promoted to Gen 1. The longest living objects are eventually promoted to Gen 2.

In general, the higher the GC generation, the more expensive it is to clean up. Ideally the GC only needs to scan the Gen 0 objects to see if they're still alive. This works well because generally Gen 0 objects reference other Gen 0 objects. Where things get tricky is if a Gen 2 object has a reference to a Gen 0 object. Then suddenly the GC needs to check the Gen 2 objects to find out if that Gen 0 object can be collected. And that's a lot more expensive.

And what does pooling do? It makes objects live longer, so they end up in Gen 2. If those objects hold references to short-lived objects…then suddenly you've made GCs much more expensive.😬
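
The promotion of long-lived objects can be observed directly with GC.GetGeneration(). The following is only a sketch (the GenerationDemo name is made up, and forcing collections with GC.Collect() is for demonstration, not something to do in production code). The exact generation numbers depend on the runtime and GC configuration, so treat the printed values as indicative:

```csharp
using System;

public static class GenerationDemo
{
    // Returns the generation of a long-lived object before and after forced collections
    public static (int Before, int After) Run()
    {
        // Stands in for a pooled instance that outlives many requests
        var longLived = new object();
        int before = GC.GetGeneration(longLived);

        // Force two collections; an object that survives is normally promoted each time
        GC.Collect();
        GC.Collect();

        int after = GC.GetGeneration(longLived);

        // Keep the object reachable until after the measurement
        GC.KeepAlive(longLived);

        Console.WriteLine($"Generation before: {before}, after: {after}");
        return (before, after);
    }
}
```

A pooled instance behaves like longLived here: it survives collections, moves into the older generations, and any short-lived objects it references then tie Gen 0 collections to those older objects.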

These issues (and others) are discussed by Stephen Toub with Scott Hanselman in the Deep .NET video on ArrayPool.

So in conclusion: should you use this? Probably not, but I enjoyed exploring it 😀

Summary

This post followed on from my previous post, in which I discussed some theoretical/experimental dependency-injection lifetimes, based on the discussion in an episode of The Breakpoint Show. In this post I presented a possible implementation of a "pooled" lifetime, which could be used with arbitrary services. I also dug into a bunch of limitations of the implementation, and used them as an excuse to discuss whether pooling of generic objects makes sense or not.

April 29, 2025 at 02:30PM
